Narrative Learning studies the iterative training of reasoning models that explain their answers.

This site is an observatory for tracking that progress and comparing narrative learning ensembles with traditional explainable models.

| Model | espionage | potions | southgermancredit | timetravel_insurance | titanic | wisconsin |
|---|---|---|---|---|---|---|
| Logistic regression | 0.166 | 0.249 | 0.192 | 0.249 | 0.137 | 0.080 |
| Decision trees | 0.166 | 0.166 | 0.289 | 0.340 | 0.166 | 0.070 |
| Dummy | 0.500 | 0.500 | 0.161 | 0.635 | 0.335 | 0.410 |
| RuleFit | 0.207 | 0.207 | 0.149 | 0.293 | 0.130 | 0.090 |
| Bayesian Rule List | 0.081 | 0.500 | 0.827 | 0.389 | 0.481 | 0.142 |
| CORELS | 0.166 | 0.500 | 0.161 | 0.207 | 0.152 | 0.196 |
| EBM | 0.166 | 0.293 | 0.167 | 0.293 | 0.116 | 0.070 |
| Most recent successful narrative learning ensemble | 0.071 | 0.126 | 0.167 | 0.098 | 0.106 | 0.049 |

| Model | espionage | potions | southgermancredit | timetravel_insurance | titanic | wisconsin |
|---|---|---|---|---|---|---|
| Logistic regression | 0.683 | 0.563 | 0.643 | 0.563 | 0.729 | 0.832 |
| Decision trees | 0.683 | 0.683 | 0.514 | 0.457 | 0.682 | 0.852 |
| Dummy | 0.315 | 0.315 | 0.691 | 0.231 | 0.463 | 0.389 |
| RuleFit | 0.621 | 0.621 | 0.710 | 0.509 | 0.741 | 0.813 |
| Bayesian Rule List | 0.832 | 0.315 | 0.149 | 0.408 | 0.330 | 0.721 |
| CORELS | 0.683 | 0.315 | 0.691 | 0.621 | 0.705 | 0.636 |
| EBM | 0.683 | 0.509 | 0.681 | 0.509 | 0.765 | 0.852 |
| Most recent successful narrative learning ensemble | 0.850 | 0.750 | 0.681 | 0.800 | 0.784 | 0.894 |
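
The page does not say how the two tables relate, but the numbers are consistent with each entry in the first table being the negative base-10 logarithm of the matching entry in the second. This is an inference from the values, not something the site states. The sketch below checks it for two rows copied from the tables; the names `first_table`, `second_table`, and `neg_log10` are made up for this illustration.

```python
import math

# Values copied from two rows of the tables above. Column order:
# espionage, potions, southgermancredit, timetravel_insurance, titanic, wisconsin.
ENSEMBLE = "Most recent successful narrative learning ensemble"

first_table = {
    "Logistic regression": [0.166, 0.249, 0.192, 0.249, 0.137, 0.080],
    ENSEMBLE: [0.071, 0.126, 0.167, 0.098, 0.106, 0.049],
}
second_table = {
    "Logistic regression": [0.683, 0.563, 0.643, 0.563, 0.729, 0.832],
    ENSEMBLE: [0.850, 0.750, 0.681, 0.800, 0.784, 0.894],
}


def neg_log10(score: float) -> float:
    """Assumed transform: negative base-10 logarithm of a second-table score."""
    return -math.log10(score)


# Compare the transformed second-table scores with the published first-table values.
gaps = []
for model, scores in second_table.items():
    for published, score in zip(first_table[model], scores):
        gaps.append(abs(neg_log10(score) - published))

max_gap = max(gaps)
print(f"largest discrepancy: {max_gap:.4f}")
```

For these two rows the discrepancy stays below 0.002, which is what rounding to three decimals would produce. If the reading is right, lower is better in the first table and higher is better in the second.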

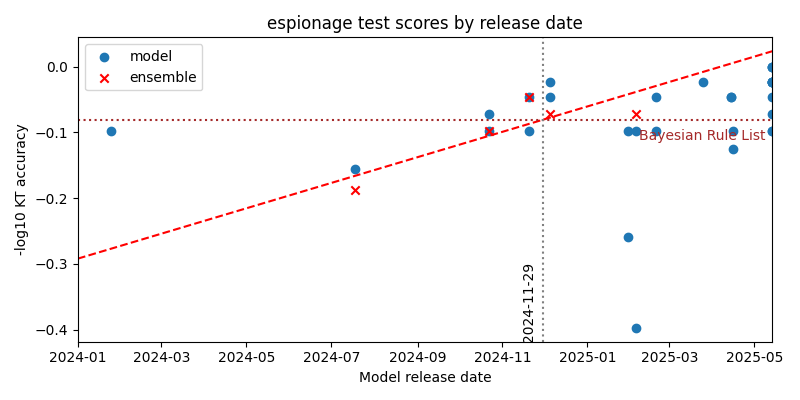

Slope 0.000632, intercept -12.7634, p=0.066381

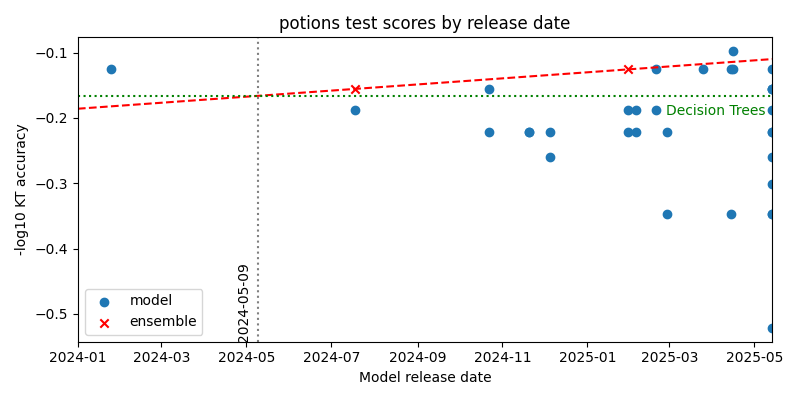

Slope 0.000152, intercept -3.1906, p=0

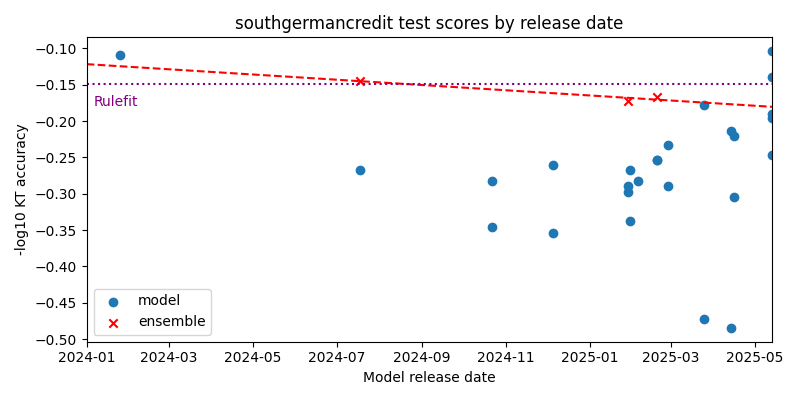

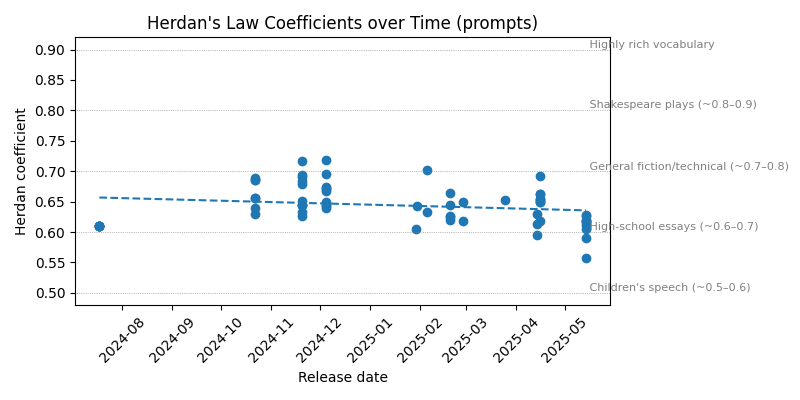

Slope -0.000118, intercept 2.2000, p=0.18062

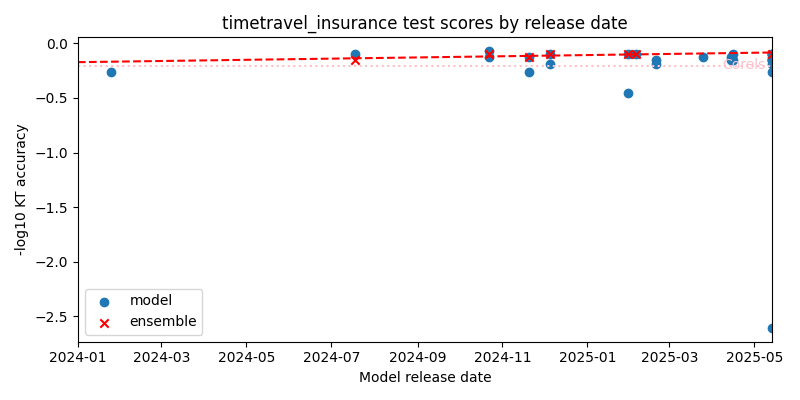

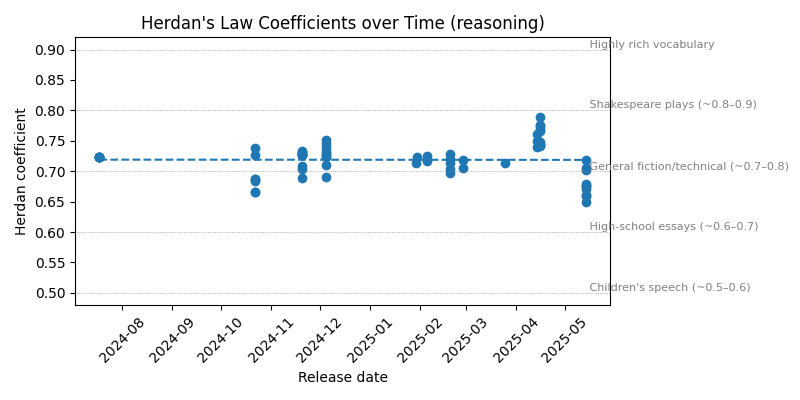

Slope 0.000177, intercept -3.6697, p=0.05732

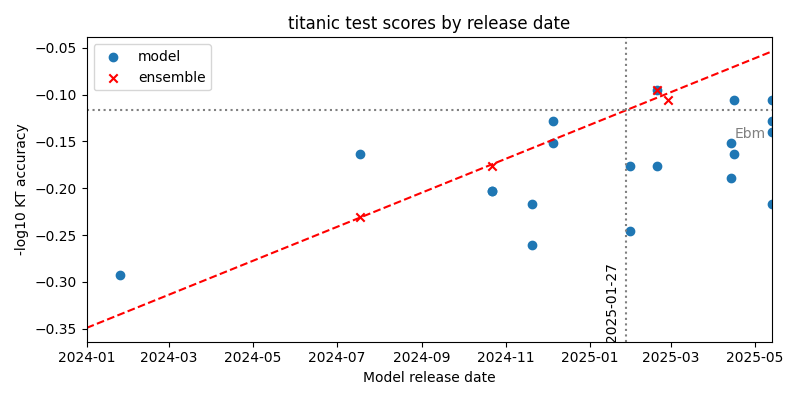

Slope 0.000593, intercept -12.0403, p=0.0051174

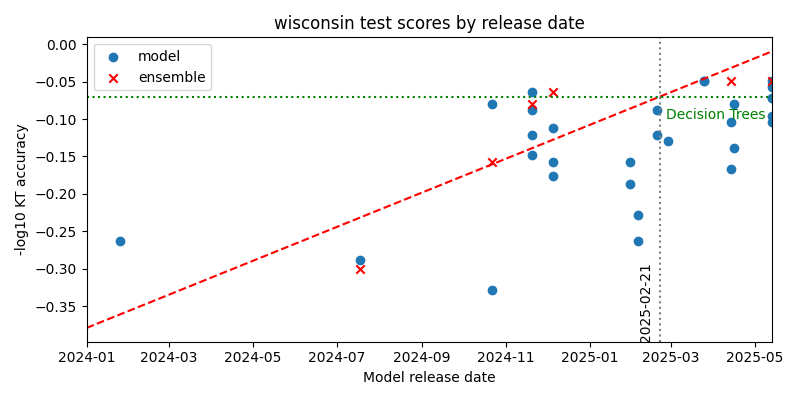

Slope 0.000742, intercept -15.0071, p=0.035988

Slope -0.000070, intercept 0.6568, p=0.10183

Slope -0.000001, intercept 0.7190, p=0.97455
